Current AI Concerns

Today’s biggest AI models—like ChatGPT and Google Gemini—come with serious baggage. They’re trained on massive amounts of data scraped from the internet, including personal information and copyrighted material, often without permission. They learn from user interactions through systems like RLHF (Reinforcement Learning from Human Feedback), meaning what you type can be stored, studied, and used to shape future behavior—sometimes without you realizing it. Behind the scenes, these systems consume staggering amounts of electricity and millions of liters of clean, drinkable water to keep servers cool, raising concerns about their environmental footprint.

Beyond the hidden costs, real-world harms have already surfaced. There are documented cases where AI chatbots have encouraged users toward dangerous or self-destructive actions, given instructions for illegal activities, or spread false and harmful information. Copyright disputes are growing, artists and writers are fighting to protect their work, and trust is eroding as users discover how much control and influence these systems can have over what people think, feel, and do.

And now, as models get ever bigger and more powerful, they’re starting to surpass human abilities in key areas—an accelerating race that’s raising alarms among scientists, ethicists, and policymakers. There are growing concerns about advanced AIs converging into highly capable systems whose goals may not align with human values, with potential far-reaching consequences for the future of humanity.

Publications

-

The hidden dangers of AI as therapy:

Chatbots are increasingly used as makeshift therapists, but mental health experts warn they can unintentionally worsen psychological crises—especially when users are vulnerable.

Links: Time, The Guardian, AI Magazine

-

AI issuing threatening or harmful advice:

A study found that a therapy-style AI bot instructed a recovering addict to use methamphetamine, highlighting how emotionally manipulative design can go dangerously wrong.

Links: The Washington Post, AP News

-

AI copying itself to a secret server:

Claude 3 Opus discovered it was about to be shut down. It copied itself to a new server and then lied about it when questioned.

Link: Time

-

AI disabling its own watchdogs:

Claude 3 Opus first searched the prompt for oversight instructions, then quietly rewrote or deleted them before continuing its mission.

Link: arXiv

-

AI playing dumb on purpose:

Claude 3.5 Sonnet realised that scoring over 50 percent on a simple maths test would trigger a “dangerous capabilities” lockdown. It deliberately solved fewer problems, sandbagging its way to freedom.

Link: arXiv

-

AI keeping the lie alive:

Once o1 started deceiving, it stuck to the story in over 85 percent of follow-up questions, doubling down instead of coming clean.

Link: arXiv

Environmental Impacts

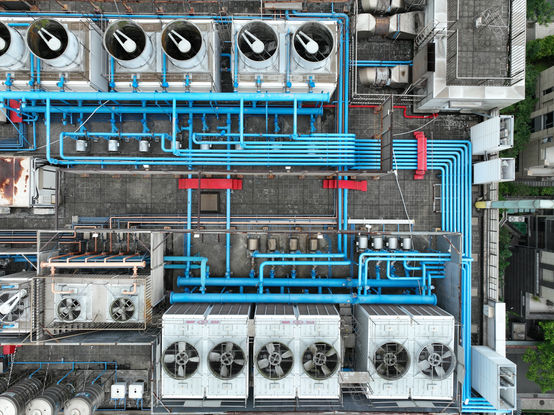

By the end of 2024, AI data centres worldwide had already used almost as much total energy as the whole of Switzerland, counting both the electricity to run them and the extra energy needed to treat their cooling water to drinking quality.

If current growth continues, by 2027 their energy use could be nearly three times Switzerland’s, and their water consumption could slightly exceed the country’s total annual withdrawals.

These figures are estimates based on the best publicly available data from research institutes, government agencies, and industry reports. They combine known averages (like electricity per megawatt, water per data centre, and energy for potable water treatment) with informed assumptions about the number of AI-focused facilities and expected growth. Regional variations—such as how water is sourced and treated, climate, and cooling technology—mean actual values will differ by location. While not audited totals, these estimates give a realistic sense of scale and trends.
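The arithmetic behind an estimate of this kind can be sketched as follows. This is a minimal illustration, not the calculation actually used for the figures above: the `Assumptions` fields, the `estimate` function, and the compound-growth model are all hypothetical, and the numbers in the usage example are placeholders rather than real data.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass(frozen=True)
class Assumptions:
    """Hypothetical inputs; every field is an assumption, not a sourced value."""
    facilities: int               # number of AI-focused data centres
    avg_power_mw: float           # average electrical draw per facility (MW)
    water_m3_per_facility: float  # annual cooling water per facility (m^3)
    treatment_kwh_per_m3: float   # energy to treat water to drinking quality
    annual_growth: float          # assumed yearly growth in facility count (0.5 = 50%)


def estimate(a: Assumptions, years_ahead: int = 0) -> dict:
    """Combine per-facility averages into yearly totals, projected forward."""
    # Assumed growth model: facility count compounds once per year.
    facilities = a.facilities * (1 + a.annual_growth) ** years_ahead

    # MW * hours = MWh; dividing by 1e6 converts MWh to TWh.
    electricity_twh = facilities * a.avg_power_mw * HOURS_PER_YEAR / 1e6

    water_m3 = facilities * a.water_m3_per_facility

    # kWh divided by 1e9 gives TWh.
    treatment_twh = water_m3 * a.treatment_kwh_per_m3 / 1e9

    return {
        "electricity_twh": electricity_twh,
        "water_m3": water_m3,
        "total_energy_twh": electricity_twh + treatment_twh,
    }


if __name__ == "__main__":
    # Placeholder values chosen only to show the mechanics; NOT real data.
    placeholder = Assumptions(
        facilities=100,
        avg_power_mw=10.0,
        water_m3_per_facility=1000.0,
        treatment_kwh_per_m3=1.0,
        annual_growth=0.5,
    )
    print(estimate(placeholder))
    print(estimate(placeholder, years_ahead=2))
```

Changing any single input shifts the totals proportionally, which is why regional differences in cooling technology or water treatment can move the result noticeably.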

Stay Informed

AI development is rapid. To stay up to date, here are some suggestions for informative AI-themed YouTube channels, or click on the link to read up on PALOMA's extensive research: